No More Hustleporn: Why TensorFlow Is The Way It Is

Tweet by Tom Goldstein

https://twitter.com/tomgoldsteincs

Associate Professor at Maryland. I want my group to do theory and scientific computing but my students don’t listen to me so I guess we do deep learning 🤷‍♂️

If you want to understand why TensorFlow is the way it is, you have to go back to the ancient times. In 2012, Google created a system called DistBelief that laid out their vision for how large-scale training would work. It was the basis for TF. 🧵

Large Scale Distributed Deep Networks – Google Research: research.google/pubs/pub40565/

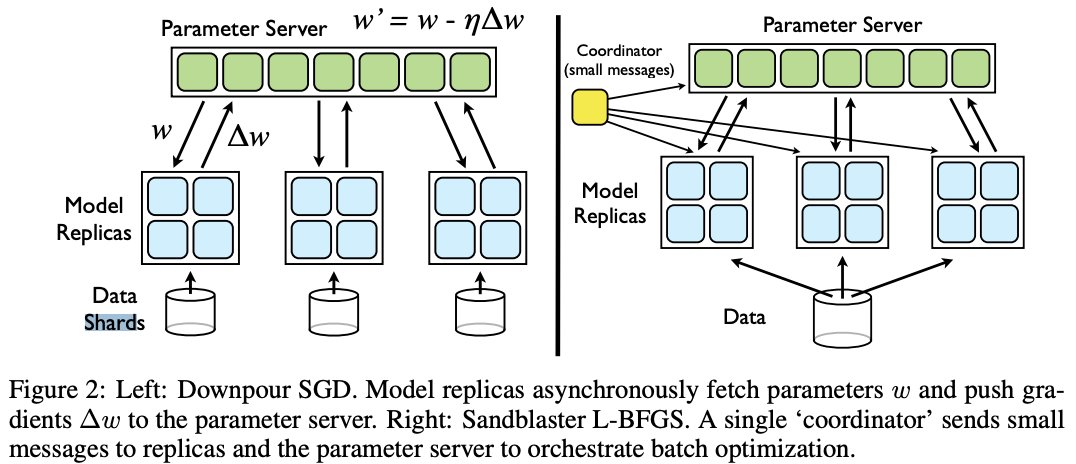

In DistBelief, both models and datasets were split across nodes. Worker nodes update only a subset of parameters at a time, and communicate parameters asynchronously to a "parameter server". A "coordinator" orchestrates the independent model, data, and parameter nodes.

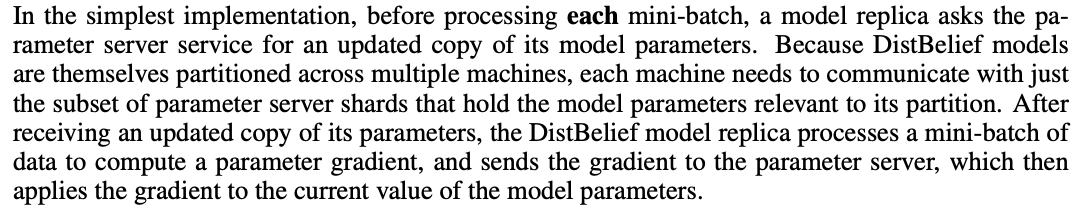

Here's a description of the *simplest* operation mode of this system, taken directly from Jeff Dean's paper.
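The following is a minimal toy sketch of the asynchronous parameter-server idea. It assumes a single process in which Python threads stand in for worker machines. `ParameterServer`, `fetch`, `push`, and the linear-regression task are hypothetical illustrations, not DistBelief's or TensorFlow's API. The sketch keeps only the data-parallel, asynchronous part of the design. The model is not partitioned across machines, the server is not sharded, and each worker fetches and pushes on every step using plain SGD.

```python
import random
import threading


class ParameterServer:
    """Hypothetical toy parameter server (not DistBelief's real API)."""

    def __init__(self, params, lr):
        self.params = dict(params)
        self.lr = lr
        self.lock = threading.Lock()  # guards params during reads and updates
        self.updates = 0

    def fetch(self):
        # A worker pulls a snapshot; it may be stale by the time it pushes.
        with self.lock:
            return dict(self.params)

    def push(self, grads):
        # Apply each gradient as soon as it arrives: no barrier between workers.
        with self.lock:
            for k, g in grads.items():
                self.params[k] -= self.lr * g
            self.updates += 1


def gradient(params, batch):
    # Mean-squared-error gradient for the toy model y = w * x + b.
    gw = gb = 0.0
    for x, y in batch:
        err = params["w"] * x + params["b"] - y
        gw += 2 * err * x
        gb += 2 * err
    n = len(batch)
    return {"w": gw / n, "b": gb / n}


def worker(server, shard, steps, batch_size, seed):
    rng = random.Random(seed)  # per-thread RNG
    for _ in range(steps):
        params = server.fetch()                 # pull (possibly stale) params
        batch = rng.sample(shard, batch_size)   # minibatch from this worker's shard
        server.push(gradient(params, batch))    # push without waiting for peers


def train_async(num_workers=4, steps=500, batch_size=8, lr=0.05):
    rng = random.Random(0)
    xs = [rng.uniform(-1, 1) for _ in range(400)]
    data = [(x, 3.0 * x + 2.0) for x in xs]     # noise-free target: w=3, b=2
    shards = [data[i::num_workers] for i in range(num_workers)]  # split the data
    server = ParameterServer({"w": 0.0, "b": 0.0}, lr)
    threads = [
        threading.Thread(target=worker, args=(server, shards[i], steps, batch_size, i))
        for i in range(num_workers)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return server


if __name__ == "__main__":
    server = train_async()
    print(server.params, server.updates)
```

Each worker pushes without waiting for the others, so a gradient may be computed from parameters that other workers have already changed. The order in which updates land depends on thread timing, so two runs need not end with identical parameters.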

TensorFlow was built to support this kind of highly distributed, asynchronous training involving heterogeneous nodes and architectures. But why?

Historically, parallel scientific computing and optimization were done using very simple, synchronous paradigms like MPI running on homogeneous systems. But around 2010 there was an influx of people from the web services community (including Google) into ML.

Web services historically use asynchronous, highly distributed paradigms and heterogeneous architectures. This caused a big wave of hype around asynchronous and heterogeneous computation for ML, with new www-inspired paradigms like "parameter servers" becoming popular.

In the end, the web paradigm didn't make sense for scientific computing applications, which can exploit the efficiency and simplicity of synchronous clusters. The good performance of DistBelief was considered non-reproducible, and simple synchronous routines surpassed it.
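For contrast, the synchronous alternative can be sketched with the same toy regression task. This is a single-process simulation that assumes every "worker" holds an identical copy of the parameters. In each step, every worker computes a gradient on its own data shard, the gradients are averaged, and all replicas apply the same update. `allreduce_mean` is a hypothetical stand-in for an MPI-style allreduce followed by division by the number of workers. No real communication happens here.

```python
import random


def gradient(params, batch):
    # Mean-squared-error gradient for the toy model y = w * x + b.
    gw = gb = 0.0
    for x, y in batch:
        err = params["w"] * x + params["b"] - y
        gw += 2 * err * x
        gb += 2 * err
    n = len(batch)
    return {"w": gw / n, "b": gb / n}


def allreduce_mean(grads_list):
    # Hypothetical stand-in for an allreduce: every worker gets the same average.
    n = len(grads_list)
    return {k: sum(g[k] for g in grads_list) / n for k in grads_list[0]}


def train_sync(num_workers=4, steps=500, batch_size=8, lr=0.05):
    rng = random.Random(0)
    xs = [rng.uniform(-1, 1) for _ in range(400)]
    data = [(x, 3.0 * x + 2.0) for x in xs]     # noise-free target: w=3, b=2
    shards = [data[i::num_workers] for i in range(num_workers)]
    rngs = [random.Random(i) for i in range(num_workers)]
    params = {"w": 0.0, "b": 0.0}               # identical replica on every worker
    for _ in range(steps):
        # 1. Every worker computes a gradient from the SAME parameters.
        local = [
            gradient(params, rngs[i].sample(shards[i], batch_size))
            for i in range(num_workers)
        ]
        # 2. Barrier + allreduce: nobody proceeds until all gradients are averaged.
        avg = allreduce_mean(local)
        # 3. All replicas apply the identical update and stay in lockstep.
        params = {k: params[k] - lr * avg[k] for k in params}
    return params


if __name__ == "__main__":
    print(train_sync())
```

There are no stale gradients and no server to coordinate, and with fixed seeds the result is the same on every run. The cost is that each step waits for the slowest worker. Synchronous clusters of similar machines keep that cost small.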

Frameworks like PyTorch came along with the idea of targeting just the simplest parallel training methods, and supporting them well, rather than trying to support everything like TF. Ultimately, it's a tradeoff: TF does support everything, but at the cost of higher complexity.